Social Networks. This is not a concept that came to humanity with the emergence of Facebook, MySpace, or Twitter. The social network is an essential part of the fabric that defines our culture, and it stretches back many millennia to the beginnings of our use of symbolism.

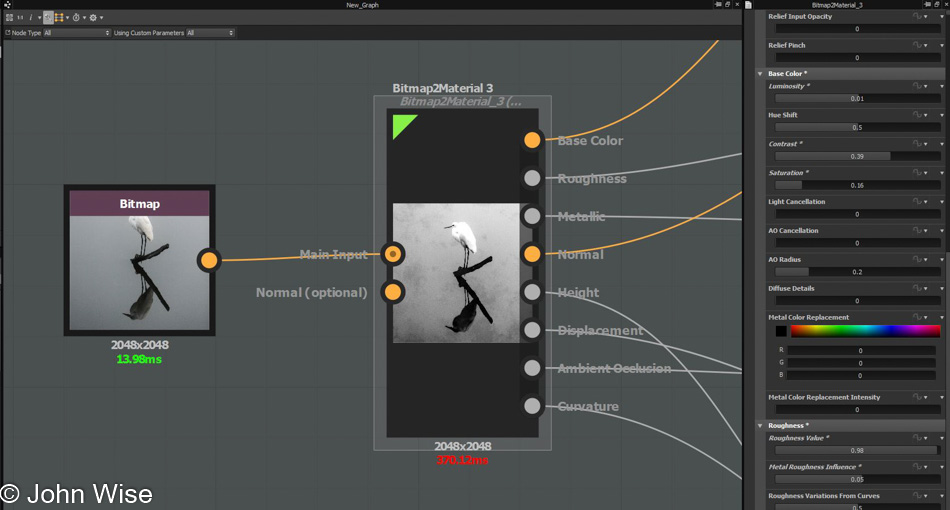

In caves, on beads, and on cliff walls, we find markings, images, and other traces of messages left for others. This form of artistic communication transcends space and time and is among the earliest evidence that humanity was building a primitive social network. You see, when someone leaves "graffiti" for others to come across at some future point, they leave an implicit message: "I existed, and I leave you this clue to my having been here before you." This draws the visitor into the social web of having shared the same space with the original poster.

As society evolved, we strove for better communication that would give others more insight into who we were. Using cuneiform symbols and, later, hieroglyphs, we began leaving exacting details about our history and accomplishments. Sculpting arrived about 2,300 years ago, while woodblock printing came to us 1,100 years after that. As technology improved, we witnessed the 15th-century arrival of the printing press using movable type, but we would still need another few hundred years for the steam engine to help launch mass production and give us the ability to print newspapers and books in large volumes, which in turn allowed far wider sharing and distribution of information.

These accomplishments were essential in allowing us to share historical knowledge and to create literature that let us dream forward about what the future might look like. We were well on the road to a global social network.

As we approached the 21st century, we witnessed an explosion of communication technology. Radio, movies and TV, the phone, the Internet, and, more recently, the smartphone have all been instrumental in networking our globe. The people we reach out to in faraway places, sharing conversation, photos, and cat memes, form our new social network: it is immediate and spontaneous. Often, though, brand awareness clouds our view, leading us to define the social network as something that resembles Facebook.

Bruno Latour, in his book "Reassembling the Social: An Introduction to Actor-Network-Theory," brings clarity to this complex idea by arguing that the social network is not simply a closed system of interactions between known actors in a particular location. The network is complex and is now likely impossible to define, because the sheer breadth of global inputs from our modern communication and entertainment systems works dynamically, even chaotically, to connect people independent of systems of association and geographic proximity.

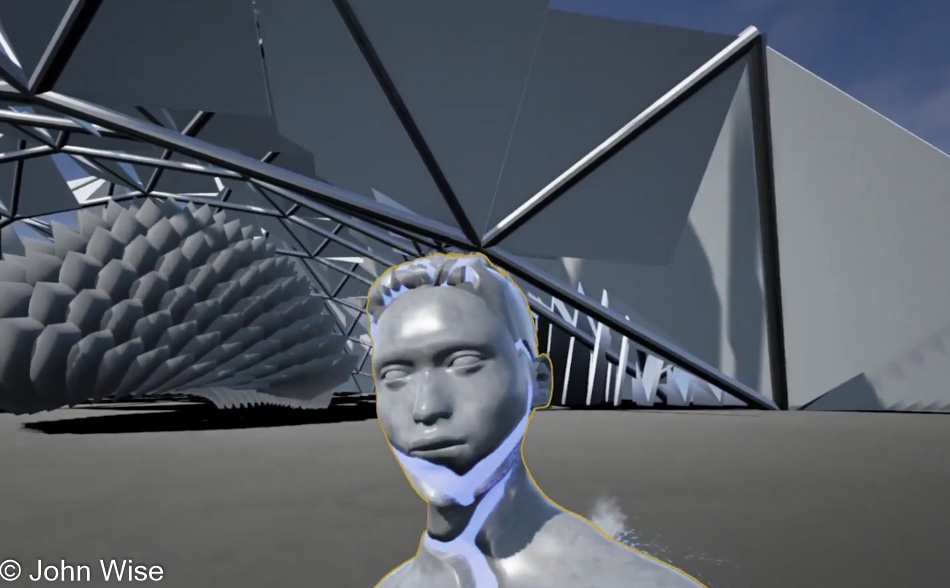

This concept matters because, as we humans begin to explore Virtual Reality, we are closing the final gap between information and experience. From early history to today, we have observed the world and built experiences based on our physical location and our access to scarce resources. This required us to be near, or to observe, landscapes, artifacts, images, videos, and lectures about a particular subject. From this, societies and organizations arose, allowing like-minded participants to share their particular curiosity.

With the advent of VR, all are welcome to visit the cave of the mysterious image. Every one of us will be invited to interpret newly discovered hieroglyphs from places that exist only in the imaginations of those sharing their art. Because experiences are a large part of how people come to understand culture and history, we can expect the form and substance of global communication to change in response to the millions of new vantage points VR brings. The shared environment will no longer be locked to a geographic locality or to certainty about cultural perspectives. The written and visual languages we use to interpret this new social network will need to evolve as rapidly as the art and tools driving this shift.

It is in our nature to leave a mark, be it a momentary trace in the dirt, a handprint on a cave wall, pigment on a canvas, or little letters printed on the screen before you. What will it mean when our mark is left upon this new type of reality? What kind of culture and history will we create? Where will future generations find our art as they explore the places we have been while immersed in Virtual Reality? How will they interpret social networks that continue to reach across space and time?