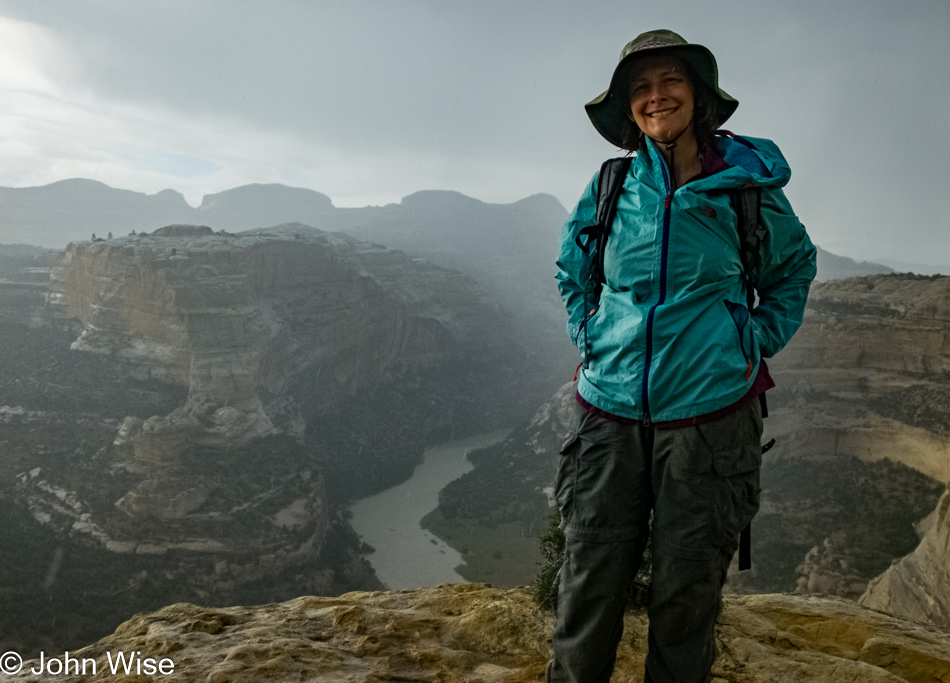

Well, this is an unmitigated disaster, as it is now NINE years after this trip was taken that I’m sitting down to post something, anything, about the last two days of our rafting trip down the Yampa River through the Dinosaur National Monument. The trip started in Colorado, and here we are approaching the confluence of the Green and Yampa Rivers just ahead, still in Colorado. The giant rock face is part of Steamboat Rock. Back in 2018, I left a note on Days 2 and 3 that something went wrong in 2014: after posting about Day 1 soon after our whitewater adventure, something interrupted my blogging, leaving a four-year gap between posting Day 1 and the next two days. The problem is, after pulling those two days out of the air with a promise that I was also about to include Days 4 and 5, I apparently fell off the raft and floated down the stream of oblivion until May 11, 2023. Now, I have a lot of nothing aside from these photos that documented the visuals of our journey; the details are long gone, and I curse myself for it.

The fact of the matter is that back in 2014, I had already embarked on another adventure that involved a deep dive into virtual reality. Things were likely moving fast around raising money, and I never had time to look back. Then, in 2018, I was gaining distance from that VR project and its failure when I turned to repair some long-neglected aspects of the blog, but before I could get very serious about things, Caroline and I were on our way to Europe for a few weeks. Obviously, I then faced the daunting task of blogging about our jaunt into Germany, France, Italy, Slovenia, Hungary, and Austria.

Look attentively at this photo, and you can see the line delineating the merger of two rivers with the muddy Yampa on the right and the relatively clear waters of the Green River on the left.

Well, here we are with some shareable information: what is known is that Caroline took out a little inflatable kayak-like boat called a Ducky. There’s no doubt I would have been terrified that she’d crash into some major whitewater and be eaten by the river; obviously, that never happened. With the Green River being dominant, the Yampa has reached its conclusion as a tributary and is now but a memory.

Views like these will forever remain nearly incomprehensible to me when it comes to how the uplift, folding, and movement of our planet’s crust work over time. Intellectually, I have some minor understanding of this area of geology, but that doesn’t mean the fluid nature of rocks and their reorganization at the surface all makes perfect sense.

Though I ponder the jagged, almost labyrinthian nature of these forms through a filter of uncertainty, I’m no less enchanted with them than I am with the ocean, the sky, or the forest.

This is the Scotsman William “Willie” Mather, a friend of Frank and Sarge’s who’ll become a friend of Caroline’s and mine too.

There are 23 distinct exposed rock layers here in the Dinosaur National Monument, and I can’t easily identify even one of them; this is what happens when you tune out, don’t take notes, and then let eons pass before attempting to excavate memories.

We’ve left the river at Jones Hole Creek and are out for a hike. We also entered Utah just minutes before our arrival on this beautiful day.

Our hike north along Jones Hole Creek will take us about 2 miles upstream.

According to one post about these pictographs along the creek, they are thought to be nearly 7,000 years old.

Information is thin regarding the area, so take this with a grain of salt.

A little-known fact about Ely Falls is that if there are a number of people in your group, there is a spot above the falls where a bunch of you can lie in the water and block the flow until it starts to go over you; then everyone leaps up simultaneously, and a rush of water spills over the falls, absolutely drenching the person leaning against the rocks. Due to a bum knee that was slowing me down the entire trip, we didn’t arrive in time to witness Willie losing his pants as the water rushed over him.

And the hike back to the river.

More river miles before pulling into camp for the night.

You may not have known this about Caroline, but she’s a Class-A tent-putter-upper.

Along the way, we had been talking with Frank, Sarge, Jill, and Willie about our trip down the Alsek in Alaska a couple of years before. On this afternoon, after Frank and Sarge had taken the bait, Frank hurt his big toe, proving to him and Sarge that they’d have to work on Sarge’s wife to let him chaperone his Marine buddy. After much consideration, we all felt that something like this was just the kind of convincing that would work on her. Five years later, for Sarge’s 70th birthday, that’s what we all did.

The challenges (shenanigans) the guides come up with for entertainment are not always cultural, historical, or scientific; at times, they are inexplicable. Interpret this fun game any way you desire.